LumiModeling

Project: A Gaussian Splatting-Based Tool for Recreating Dynamic Material and Lighting Interaction in Architecture

Advisors: Takehiko Nagakura, Bill Freeman

Date: 2025.5

Keywords: 3D Reconstruction, Gaussian Splatting, Computer Vision

This thesis implements a Gaussian Splatting (GS)-based tool to simulate the dynamic interplay between materiality and lighting in architectural spaces, enabling real-time analysis of surface behavior under varying environmental and illumination conditions.

Background

Figure 1: Renderings of Ronchamp © yane markulev

Lighting plays a fundamental role in shaping architectural atmosphere, influencing how spaces are perceived and experienced. However, current digital design tools often fall short in capturing the dynamic interplay between light, material, and space, especially under real-world and time-varying conditions. Although the conventional modeling-and-rendering pipeline can produce photorealistic results, it can still lose detail that 3D scanning of a real site would capture.

Research Idea

This problem leads to my thesis, which implements a Gaussian Splatting (GS)-based tool to simulate the dynamic interplay between materiality and lighting in architectural spaces, enabling real-time analysis of surface behavior under varying environmental and illumination conditions.

Conclusion

In summary, this research demonstrates that relightable GS extends architectural analysis beyond traditional tools, capturing subtle interactions between material, light, and environment. It is not a design tool yet since it relies on real-world image capture. However, since the technique is still emerging rapidly, this can be further integrated into the design process so that architects can make more informed decisions regarding materiality and lighting.


Figure 2: From 2D to 4D reconstruction

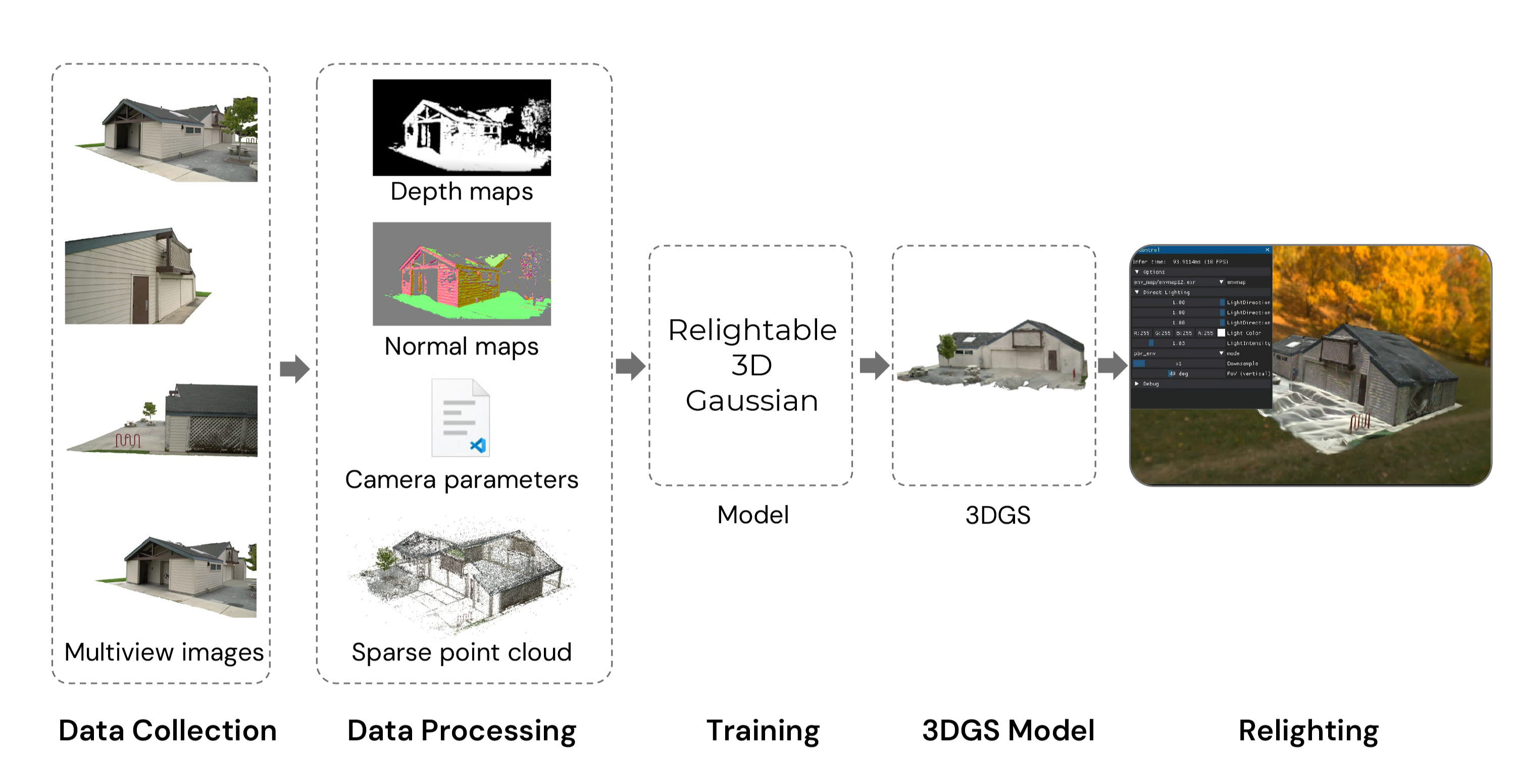

Pipeline

The technical part of this research builds on the model presented in the paper "Relightable 3D Gaussian: Real-time Point Cloud Relighting with BRDF Decomposition and Ray Tracing" [1]. That work extends conventional 3D Gaussian techniques by attaching additional properties to each Gaussian: a normal vector, BRDF parameters, and incident lighting from various directions. These additions allow geometry and materials to be recovered through inverse rendering, complex occlusions to be handled with point-based ray tracing, and the scene to be relit dynamically.
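To make the extra per-Gaussian properties concrete, the following is a minimal Python sketch of what such a record might hold. The class and field names (`RelightableGaussian`, `base_color`, `roughness`, `incident_light`) are illustrative assumptions rather than the data layout of the cited paper's code, and the incident-light encoding is left as an opaque list of coefficients.

```python
import math
from dataclasses import dataclass, field

Vec3 = tuple[float, float, float]


@dataclass
class RelightableGaussian:
    """Hypothetical per-Gaussian record; names are illustrative only."""

    # Attributes a standard 3D Gaussian splat already carries.
    position: Vec3
    scale: Vec3
    rotation: tuple[float, float, float, float]  # quaternion (w, x, y, z)
    opacity: float

    # Extra attributes needed for relighting.
    normal: Vec3             # surface orientation at the Gaussian
    base_color: Vec3         # BRDF parameter: diffuse reflectance
    roughness: float         # BRDF parameter: width of the specular lobe
    # Coefficients of some directional incident-light encoding
    # (the layout here is an assumption, left unspecified).
    incident_light: list[float] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Keep the normal at unit length so later dot products are cosines.
        length = math.sqrt(sum(c * c for c in self.normal))
        if length == 0.0:
            raise ValueError("normal must be non-zero")
        self.normal = tuple(c / length for c in self.normal)


g = RelightableGaussian(
    position=(0.0, 0.0, 0.0),
    scale=(0.1, 0.1, 0.01),
    rotation=(1.0, 0.0, 0.0, 0.0),
    opacity=0.9,
    normal=(0.0, 0.0, 2.0),
    base_color=(0.8, 0.8, 0.8),
    roughness=0.3,
)
```

Storing the normal and BRDF parameters alongside the usual splat attributes is what lets shading be recomputed when the lighting changes, instead of replaying colors baked in at capture time.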

Figure 3: Pipeline

Implementation

These relighting experiments under various environmental conditions illustrated the model's robust capability to adapt to dynamic lighting changes.

Figure 5: Relighting results


A user interface is further developed to allow real-time visualization of, and interaction with, the 3D Gaussian Splat model. It lets users manipulate the model (dragging, scaling, moving, rotating) and observe how it responds to different lighting conditions, ultimately achieving a dynamic 4D model.

Figure 6: Interactive GUI

The technical workflow comprises:

1. Data Collection: Acquiring multi-view images from an architectural site.

2. Data Processing: Converting images into RGB, depth maps, normal maps, and extracting camera parameters.

3. Model Training: Training a Gaussian Splat representation from captured data.

4. Relighting: Applying ray tracing techniques to simulate lighting variations.

5. Visualization: Implementing a real-time rendering GUI.

Using a dataset of 300 images of a barn, the reconstruction process was completed in 20 minutes on an RTX 4090 GPU. The resulting Gaussian splat representation encoded key surface attributes such as color, opacity, depth, and normal vectors, enabling precise material rendering. Once reconstructed, the model undergoes relighting via environment maps.
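Relighting via an environment map comes down to asking, for any direction, how much light arrives from it. The sketch below shows one common way to do that lookup for an equirectangular (latitude-longitude) map. The axis convention (y up, the -z direction at the image centre), the nearest-neighbour sampling, and the function names are assumptions made for illustration, not details taken from the thesis implementation.

```python
import math


def direction_to_equirect_uv(direction):
    """Map a 3D direction to (u, v) in [0, 1] on an equirectangular image.

    Assumed convention: y is up, and -z maps to the image centre.
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    u = math.atan2(x, -z) / (2.0 * math.pi) + 0.5      # longitude
    v = math.acos(max(-1.0, min(1.0, y))) / math.pi    # 0 at zenith, 1 at nadir
    return u, v


def sample_environment(env_map, direction, rotation_deg=0.0):
    """Nearest-neighbour lookup in env_map (a list of rows of RGB tuples).

    rotation_deg spins the map about the vertical axis, which is a simple
    way to try the same sky from a different compass orientation.
    """
    height, width = len(env_map), len(env_map[0])
    u, v = direction_to_equirect_uv(direction)
    u = (u + rotation_deg / 360.0) % 1.0
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return env_map[row][col]


# Tiny 2x4 map: the top row stands for sky, the bottom row for ground.
env = [
    [(0, 0, 0), (1, 1, 1), (2, 2, 2), (3, 3, 3)],
    [(10, 10, 10), (11, 11, 11), (12, 12, 12), (13, 13, 13)],
]
straight_up = sample_environment(env, (0.0, 1.0, 0.0))
forward = sample_environment(env, (0.0, 0.0, -1.0))
forward_rotated = sample_environment(env, (0.0, 0.0, -1.0), rotation_deg=90.0)
```

Swapping `env` for a different map, or changing `rotation_deg`, changes the light seen from every direction without touching the reconstructed geometry.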

Figure 4: 3DGS reconstruction result

Expanding to Real Sites

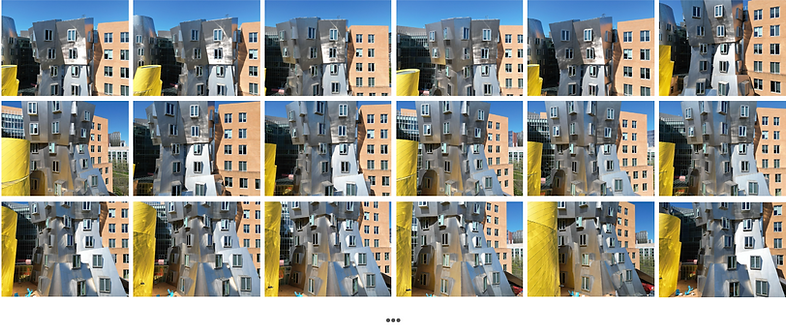

Figure 7: The Stata Center Dataset

To test this pipeline on a real architectural site, the Stata Center is chosen for its complex geometry and metal cladding. The images are captured using a DJI drone on a sunny day to ensure comprehensive multi-view coverage. In total, 290 images at 4032 × 3024 pixels are collected at varying elevations and angles.

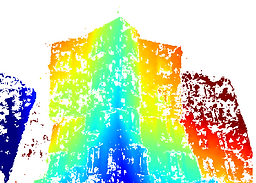

Camera intrinsics and extrinsics are recovered using COLMAP (Schönberger & Frahm, 2016), a general-purpose Structure-from-Motion (SfM) and Multi-View Stereo (MVS) pipeline with graphical and command-line interfaces. Vis-MVSNet (Zhang et al., 2020), a multi-view stereo framework, is used to generate filtered depth maps. Each RGB image is resized to 1280 × 960 pixels for faster computation. Masks are created using CVAT, and per-pixel normal maps are computed through a Python preprocessing pipeline written by the author.
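Two steps in this preprocessing lend themselves to a short illustration: rescaling the camera intrinsics when an image is resized, and deriving per-pixel normals from a depth map. The sketch below is one plausible way to do both in plain Python and is not the author's pipeline. It assumes a pinhole camera with x right, y down and z forward, treats non-positive depth as filtered out, and all function names and the example focal length are hypothetical.

```python
import math


def scale_intrinsics(fx, fy, cx, cy, old_size, new_size):
    """Rescale pinhole intrinsics when an image goes from old_size to new_size.

    Sizes are (width, height) in pixels.
    """
    (old_w, old_h), (new_w, new_h) = old_size, new_size
    return (fx * new_w / old_w, fy * new_h / old_h,
            cx * new_w / old_w, cy * new_h / old_h)


def backproject(u, v, z, fx, fy, cx, cy):
    """Lift pixel (u, v) with depth z to a camera-space 3D point."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normals_from_depth(depth, fx, fy, cx, cy):
    """Estimate camera-facing normals from a depth map (list of rows).

    Uses forward differences between back-projected neighbours. Pixels on
    the last row or column, or next to invalid depth, keep a zero normal.
    """
    h, w = len(depth), len(depth[0])
    normals = [[(0.0, 0.0, 0.0) for _ in range(w)] for _ in range(h)]
    for v in range(h - 1):
        for u in range(w - 1):
            z, z_right, z_down = depth[v][u], depth[v][u + 1], depth[v + 1][u]
            if min(z, z_right, z_down) <= 0.0:
                continue  # filtered or missing depth
            p = backproject(u, v, z, fx, fy, cx, cy)
            right = backproject(u + 1, v, z_right, fx, fy, cx, cy)
            down = backproject(u, v + 1, z_down, fx, fy, cx, cy)
            ex = tuple(a - b for a, b in zip(right, p))
            ey = tuple(a - b for a, b in zip(down, p))
            n = cross(ey, ex)  # this order makes the normal face the camera (-z)
            length = math.sqrt(sum(c * c for c in n))
            if length > 0.0:
                normals[v][u] = tuple(c / length for c in n)
    return normals


# Intrinsics for a 4032 x 3024 capture resized to 1280 x 960
# (the 3000-pixel focal length is a made-up value).
fx, fy, cx, cy = scale_intrinsics(3000.0, 3000.0, 2016.0, 1512.0,
                                  (4032, 3024), (1280, 960))
flat_wall = [[2.0] * 4 for _ in range(4)]  # fronto-parallel plane, depth 2
wall_normals = normals_from_depth(flat_wall, fx, fy, cx, cy)
```

For real images a vectorised version with an array library would be far faster; the loop form is used here only to keep each step visible.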

Figure 8: RGB image with its corresponding depth map and normal map

The training proceeds in two stages:

1. 3DGS stage (30k iterations): Generates base geometry and appearance

2. NEILF relighting stage (50k iterations): Adds directional lighting and BRDF support

The output is a high-fidelity GS scene capable of rendering at interactive rates.

The second stage runs in NEILF mode and is initialized from the GS model pretrained in the first stage. This stage adds:

1. BRDF decomposition to extract diffuse and specular reflectance

2. Lighting environment estimation from image cues

3. Ray-traced visibility to compute occlusions and simulate hard shadows
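The way these three ingredients combine can be illustrated with a discretized rendering equation: outgoing light at a surface point is a sum, over incoming directions, of BRDF × incident radiance × visibility × cosine × solid angle. The sketch below is a simplified stand-in and not the model's actual shading code. It keeps only a Lambertian diffuse term (the specular lobe is omitted for brevity), works on a single color channel, and takes the lighting and visibility as plain callables. All names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def hemisphere_samples(n_theta=32, n_phi=64):
    """Midpoint-rule directions over the hemisphere around +z.

    Returns a list of (direction, solid_angle) pairs.
    """
    samples = []
    d_theta = (math.pi / 2.0) / n_theta
    d_phi = 2.0 * math.pi / n_phi
    for i in range(n_theta):
        theta = (i + 0.5) * d_theta
        for j in range(n_phi):
            phi = (j + 0.5) * d_phi
            direction = (math.sin(theta) * math.cos(phi),
                         math.sin(theta) * math.sin(phi),
                         math.cos(theta))
            samples.append((direction, math.sin(theta) * d_theta * d_phi))
    return samples


def shade_diffuse(normal, albedo, samples, incident_radiance, visibility):
    """Sum the Lambertian BRDF times light, visibility and cosine over samples.

    incident_radiance(direction) plays the role of the estimated lighting;
    visibility(direction) returns 1.0 if the direction is unoccluded and
    0.0 if it is blocked, standing in for the ray-traced occlusion test.
    """
    total = 0.0
    for direction, solid_angle in samples:
        cos_term = max(0.0, dot(normal, direction))
        brdf = albedo / math.pi  # Lambertian diffuse reflectance
        total += (brdf * incident_radiance(direction)
                  * visibility(direction) * cos_term * solid_angle)
    return total


samples = hemisphere_samples()
up = (0.0, 0.0, 1.0)
# Uniform white sky, nothing blocking: the result approaches the albedo.
open_sky = shade_diffuse(up, 0.5, samples, lambda d: 1.0, lambda d: 1.0)
# Same sky, but everything on the -y side is occluded.
half_blocked = shade_diffuse(up, 0.5, samples, lambda d: 1.0,
                             lambda d: 1.0 if d[1] > 0.0 else 0.0)
```

In this formulation shadows enter only through the visibility term, so how well shadows follow a moving light depends on how that term is evaluated for each new lighting condition.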

First stage training result

Second stage training result


Benchmarking shows that each relighting update renders in under one second per frame on an NVIDIA L20 GPU, giving near real-time performance. This latency is low enough to support interactive tasks such as iterative material evaluation and lighting design exploration.

Qualitative tests demonstrate that the tool preserves key material properties, such as glossiness, translucency, and specular response, under varying light conditions. However, one current limitation is the static behavior of hard shadows, which do not fully respond to changes in light direction due to the baked occlusion structure inherent in the GS representation.

Figure 9: Relighting results

User Study

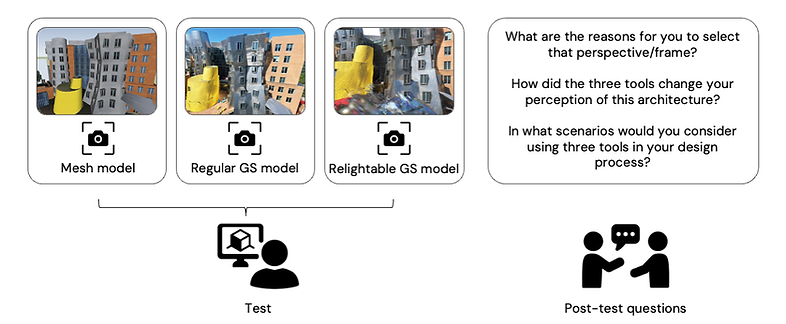

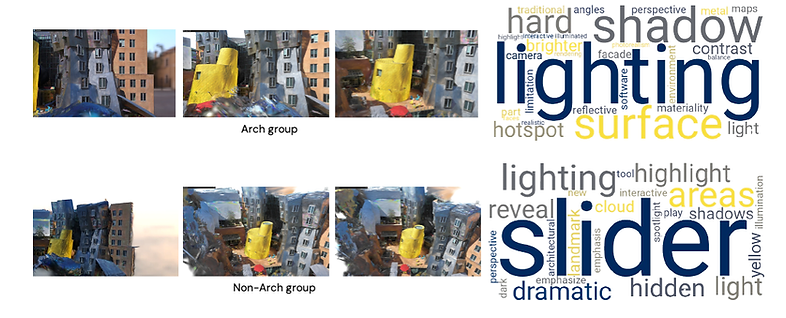

A user study compares three distinct digital modeling approaches to study how different visualization tools influence architectural perception and decision-making. The objective is to assess the perceptual outcomes of each tool and the potential of relightable GS as a design support system.

The study involved the following visualization methods:

1. A traditional mesh model (from open-source assets)

2. A standard GS viewer (Luma AI-based)

3. A relightable GS viewer (developed in this thesis)

Figure 10: User study setup

The user study further demonstrates that architects shift their focus as the visualization tool changes. In mesh models, users prioritize form and geometry; in GS, attention shifts to surface materiality; and with relightable GS, designers begin to consider lighting dynamics and contrast. This progression suggests that increased visual realism facilitates deeper architectural analysis.

Figure 11: Mesh viewer's results

Figure 12: Common GS viewer's results

Figure 13: Relightable GS viewer's results
